Back in 2015, I worked with the IBM Watson Analytics team to promote the idea of the citizen analyst, the average citizen who could do data analysis in support of causes they care about. The concept was solid: given the right tools, people could help understaffed and underfunded causes make the most of data that was publicly available.

Unfortunately, this vision never came to fruition. Not because people don’t care or the tools were bad, but because the data that most causes and organizations work with is incredibly messy. Messy, badly structured data is difficult to work with, and the average citizen analyst isn’t going to have the time or the background to clean up the data in order to make use of it, even for causes that they’re incredibly passionate about.

I recently took a look at one such data problem. As I write this, it’s Pride Month, a celebration and recognition of the importance of equal rights for everyone, regardless of sexual orientation or gender identity. I had a data question I wanted an answer to, a puzzle to solve:

How prevalent are hate crimes against LGBTQIA+ people in the United States?

One would think, in this era of perpetual surveillance and instant communication, that this would be a simple, easily-obtained answer. Quite the contrary: in fact, the answer requires a lot of data detective work. However, if we do the preparatory work, the end result should be a dataset that any citizen analyst could pick up and use within everyday spreadsheet software to perform their own analysis.

Let’s walk through the process, including some functional code you’re welcome to copy and paste (or grab the entire code/notebook from our Github repository) to find answers.

Data Sources

First, we need to know where to look. Crime data is compiled by the US Department of Justice and the Federal Bureau of Investigation’s Uniform Crime Reporting system. That’s a logical place to start, and indeed, the FBI has a special portal set up for citizens to obtain hate crime data. However, this data by itself is flawed; in many states, either no specific legislation exists to count crimes with bias against LGBTQ people as hate crimes, or police departments are not required to report such crimes in their reporting to the FBI.

Other organizations, such as the Human Rights Campaign, do gather supplementary data, so we’d need to dig into what those organizations have to offer.

Finally, we do have access to social media and news data, which can be used to further supplement and add context to our project dataset.

This is a data preparation script necessary to handle LGBTQIA+ hate crime data along with supplementary data for public use. The intended purpose of this data is to make it easier for those researching hate crimes against LGBTQIA+ populations to have a single, normalized table of data to work with for correlation, modeling, etc.

The source data is sourced from a variety of places; each source is noted and hyperlinked in the notebook for independent verification.

This code is released under the GNU General Public License. Absolutely no warranty or support of any kind is included. Use at your own risk.

Prerequisites

These lines tell the programming language R (which is what this project was built in) to load specific libraries that help with the data processing.

library(here)

library(janitor)

library(tidyverse)

library(summarytools)Hate Crimes Data File

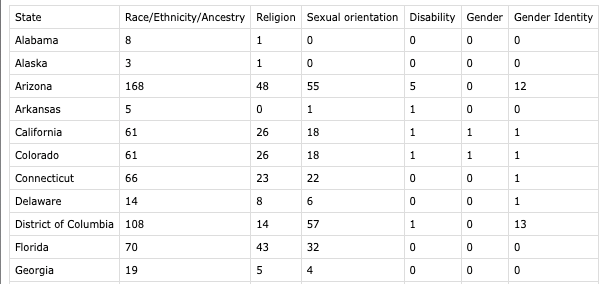

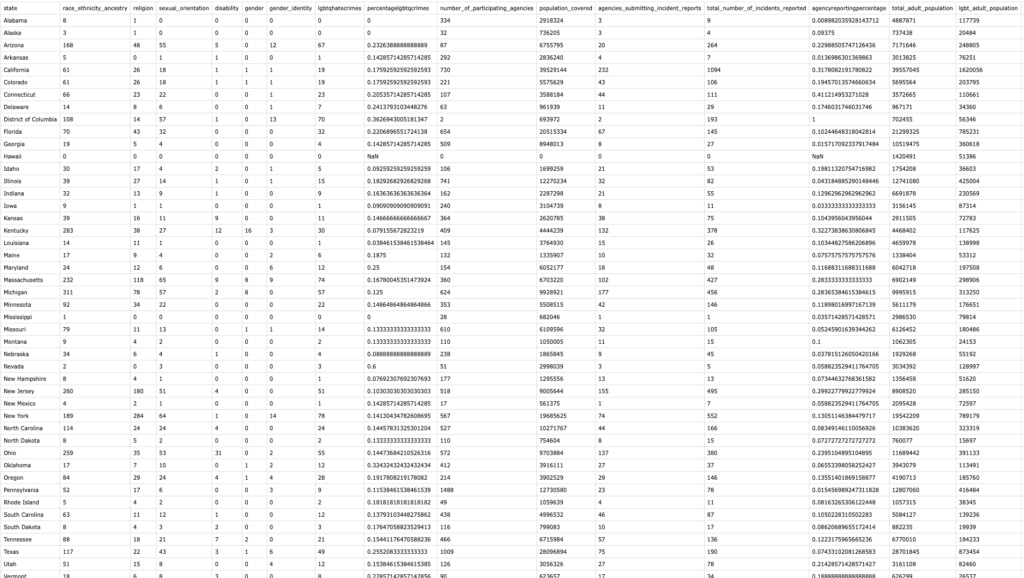

The data from this file is sourced from the FBI Uniform Crime Reports, 2017. FBI data is really, really messy. In order to collate this information, you have to download each state’s report as individual spreadsheet files and manually copy and paste the summary data into a single table.

Note that according to the FBI, the state of Hawaii does not participate in any hate crime reporting, so all Hawaii data will be zeroes.

We’ll also feature engineer this table to sum up both sexual orientation and gender identity as an “LGBTQIA+ Hate Crime” column, plus provide a percentage of all hate crimes vs. LGBTQIA+ Hate Crimes.

hatecrimesdf <- read_csv("hatecrimesgeo.csv") %>%

clean_names() %>%

mutate(lgbtqhatecrimes = sexual_orientation + gender_identity) %>%

mutate(

percentagelgbtqcrimes = lgbtqhatecrimes / (

race_ethnicity_ancestry + religion + sexual_orientation + disability + gender + gender_identity

)

)Parsed with column specification:

cols(

State = [31mcol_character()[39m,

`Race/Ethnicity/Ancestry` = [32mcol_double()[39m,

Religion = [32mcol_double()[39m,

`Sexual orientation` = [32mcol_double()[39m,

Disability = [32mcol_double()[39m,

Gender = [32mcol_double()[39m,

`Gender Identity` = [32mcol_double()[39m

)State Population Data and Reporting

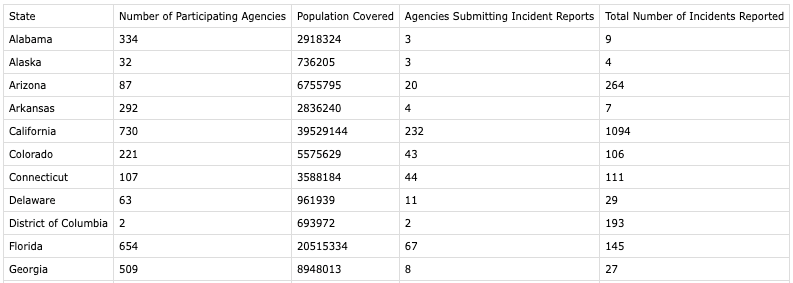

In order to make any kind of decisions about frequency, we need to take into account the overall population, number of police agencies, etc. This data file also comes from the FBI.

We’ll also feature engineer this table to add a percentage of agencies reporting incidents. This is important because underreporting is a known issue with hate crimes in particular.

hatereportingdf <- read_csv("hatecrimereporting.csv") %>%

clean_names() %>%

mutate(agencyreportingpercentage = agencies_submitting_incident_reports / number_of_participating_agencies)Parsed with column specification:

cols(

State = [31mcol_character()[39m,

`Number of Participating Agencies` = [32mcol_double()[39m,

`Population Covered` = [32mcol_double()[39m,

`Agencies Submitting Incident Reports` = [32mcol_double()[39m,

`Total Number of Incidents Reported` = [32mcol_double()[39m

)LGBTQIA+ Population Estimates

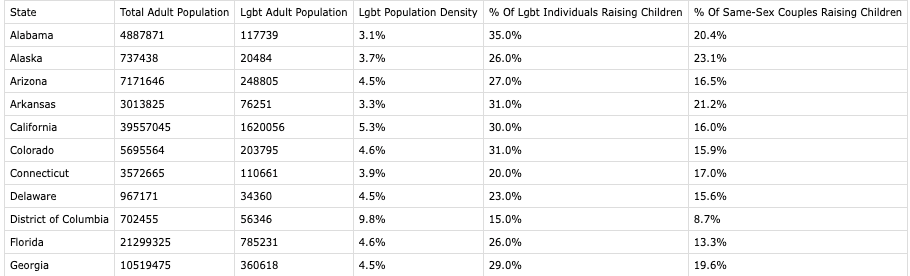

To better understand reporting of crimes, we need to know the density of the population. What percentage of the population identifies as LGBT per state? This data comes from the Movement Advancement Project and is 2018 data, so slightly newer than the FBI data.

We’ll feature engineer the character vectors to become numbers.

lgbtpopdf <- read_csv("maplgbtpopulations.csv") %>%

clean_names() %>%

mutate(lgbt_population_density = as.numeric(sub("%", "", lgbt_population_density)) /

100) %>%

mutate(percent_of_lgbt_individuals_raising_children = as.numeric(sub(

"%", "", percent_of_lgbt_individuals_raising_children

)) / 100) %>%

mutate(percent_of_same_sex_couples_raising_children = as.numeric(sub(

"%", "", percent_of_same_sex_couples_raising_children

)) / 100)Parsed with column specification:

cols(

State = [31mcol_character()[39m,

`Total Adult Population` = [32mcol_double()[39m,

`Lgbt Adult Population` = [32mcol_double()[39m,

`Lgbt Population Density` = [31mcol_character()[39m,

`% Of Lgbt Individuals Raising Children` = [31mcol_character()[39m,

`% Of Same-Sex Couples Raising Children` = [31mcol_character()[39m

)LGBTQIA+ Legal Protection

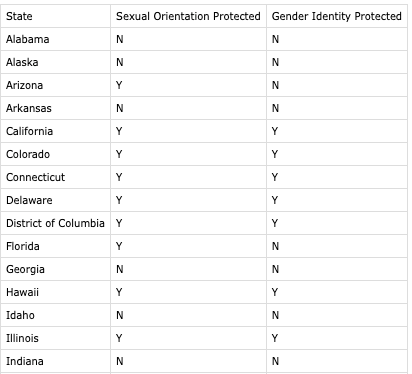

To see the big picture, let’s look at which states offer protection against hate crimes for both sexual orientation and gender identity. This data also comes from the Movement Advancement Project.

For machine learning purposes, we’ll also one-hot encode these values (in the source data as Y/N fields) as 0s and 1s.

legaldf <- read_csv("lgbtqlegalprotection.csv") %>%

clean_names() %>%

mutate(

sexual_orientation_protected_num = case_when(

sexual_orientation_protected == "Y" ~ 1,

sexual_orientation_protected == "N" ~ 0

)

) %>%

mutate(

gender_identity_protected_num = case_when(

gender_identity_protected == "Y" ~ 1,

gender_identity_protected == "N" ~ 0

)

)Parsed with column specification:

cols(

State = [31mcol_character()[39m,

`Sexual Orientation Protected` = [31mcol_character()[39m,

`Gender Identity Protected` = [31mcol_character()[39m

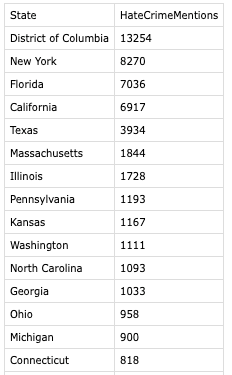

)Conversations About Hate Crimes

One area that’s extremely difficult to measure is just how bad underreporting of hate crimes is. How do we determine the prevalence of anti-LGBTQIA+ hate crimes, especially in places where no legal protections exist and agencies aren’t required to report such data to the FBI?

One potential way to assess this would be to bring in social media conversations and content, geographically located, about specific hate crimes. We pulled data from the Talkwalker media monitoring platform to assess what kinds of news reports might exist about hate crimes; this query filters out high-frequency but largely irrelevant content about hate crimes in general, but not specific reports:

((“LGBT” OR “LGBTQ” OR “gay” OR “bisexual” OR “transgender” OR “lesbian”) AND (“Hate Crime” OR “Attacked” OR “attack” OR “assault” OR “murder” OR “rape” OR “charged” OR “indicted” OR “guilty”) AND (“police” OR “law enforcement”)) AND NOT (“Trump” OR “Smollett” OR “Putin” OR “Obama” OR “@realdonaldtrump” OR “Pence” OR “burt reynolds” OR “rami malek” OR “abortion” OR “bolsonaro” OR “Congress”)

Note that this data source’s time frame is July 5, 2018 – June 6, 2019 owing to limitations in what social tools can extract from social APIs.

Let’s bring this table in as well.

socialdf <- read_csv("talkwalkerhatecrimementions.csv") %>%

clean_names()Parsed with column specification:

cols(

State = [31mcol_character()[39m,

HateCrimeMentions = [32mcol_double()[39m

)One anomaly worth considering is that even with filtering, a substantial amount of content and conversation will always come from sources located in the District of Columbia.

What would you use this data for? Like the data about number of police agencies reporting, social media data could be used to weight and adjust the number of raw hate crimes reported in a region.

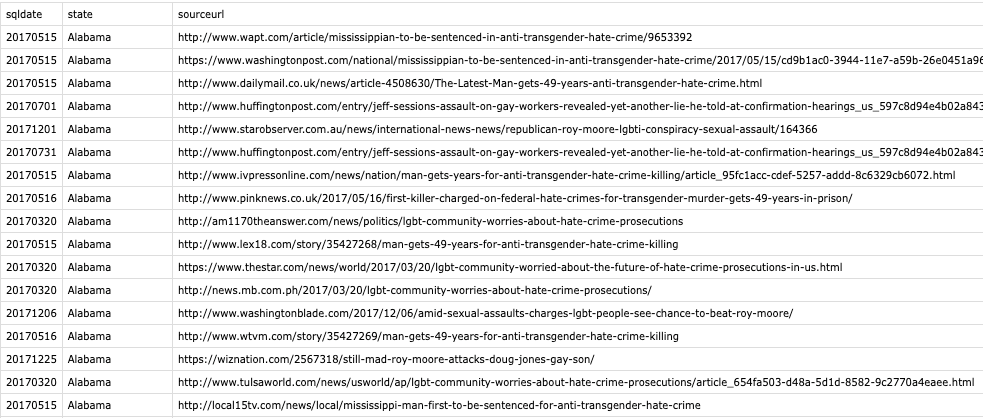

News About Hate Crimes

To further assist in counterbalancing the underreporting of hate crimes, we also extracted news articles from Google’s BigQuery database and the GDELT project that were tagged with a defined location within the United States, using the same query (minus SQL syntax variations) as our social media query:

select distinct sourceurl, sqldate, Actor1Geo_FullName, Actor2Geo_FullName from

gdelt-bq.gdeltv2.eventswhere (sourceurl like ‘%hate-crime%’ OR sourceurl like ‘%rape%’ OR sourceurl like ‘%murder%’ OR sourceurl like ‘%attack%’ OR sourceurl like ‘%assault%’ OR sourceurl like ‘%charged%’ OR sourceurl like ‘%indicted%’ OR sourceurl like ‘%guilty%’) and (sourceurl like ‘%lgbt%’ OR sourceurl like ‘%gay%’ OR sourceurl like ‘%gay%’ OR sourceurl like ‘%bisexual%’ or sourceurl like ‘%transgender%’ or sourceurl like ‘%lesbian%’) and (sourceurl NOT LIKE ‘%trump%’ AND sourceurl NOT LIKE ‘%Trump%’ AND sourceurl NOT LIKE ‘%pence%’ AND sourceurl NOT LIKE ‘%smollett%’ AND sourceurl NOT LIKE ‘%obama%’ AND sourceurl NOT LIKE ‘%putin%’ AND sourceurl NOT LIKE ‘%abortion%’ AND sourceurl NOT LIKE ‘%malek%’ AND sourceurl NOT LIKE ‘%congress%’ AND sourceurl NOT LIKE ‘%bolsonaro%’ AND sourceurl NOT LIKE ‘%abbott%’) AND (Actor1Geo_FullName like ‘%, United States%’ OR Actor2Geo_FullName like ‘%, United States%’) and (sqldate >= 20170101 and sqldate <= 20171231)

This datafile comes in with individual lines including dates and such, so let’s clean and prepare it. It starts out with 2,782 lines, which need to be consolidated down to counts in order to be merged into our main table later on.

The end result dataframe will be a list of states and the number of stories in 2017 for that state.

newsdf <- read_csv("googlenewshatearticles2017.csv") %>%

clean_names() %>%

arrange(state) %>%

distinct()Parsed with column specification:

cols(

sqldate = [32mcol_double()[39m,

State = [31mcol_character()[39m,

sourceurl = [31mcol_character()[39m

)newsdf$state <- as.factor(newsdf$state)

newsstoriesdf <- newsdf %>%

transmute(state) %>%

group_by(state) %>%

tally() %>%

rename(news_stories_count = n)

Note that any organization that wishes to make use of this data can manually inspect and discard individual news URLs in the source file, should they be so inclined.

Putting the Pieces Together

We now have six different tables from different data sources. While valuable individually, we could do much more if we had them all together. So let’s do that using a series of joins.

We’ll also write a copy of the table as a CSV for easy import into software like Excel, Google Sheets, etc. so that any citizen analyst can pick up the table and work with a clean, consolidated dataset.

joindf <- left_join(hatecrimesdf, hatereportingdf, by = "state")

joindf <- left_join(joindf, lgbtpopdf, by = "state")

joindf <- left_join(joindf, legaldf, by = "state")

joindf <- left_join(joindf, socialdf, by = "state")

joindf <- left_join(joindf, newsstoriesdf, by = "state")Column `state` joining character vector and factor, coercing into character vectorFeature Engineering

Because high population states inevitable skew absolute numbers of anything, we’ll want to account for that by doing a calculation of LGBTQIA-specific hate crimes per capita. We can do this now because we’ve joined all our data from disparate tables together. From the MAP data, we have the LGBTQIA+ adult population, and we have the LGBTQ hate crimes data from the FBI.

We’ll also engineer a feature for LGBTQIA+ hate crimes per capita for LGBTQIA+ populations. This insight, a ratio of hate crimes per LGBTQIA+ adult person, helps us understand the level of intensity of hate crimes against the LGBTQIA+ population specifically.

joindf <- joindf %>%

mutate(lgbtqcrimespercapita = lgbtqhatecrimes / population_covered) %>%

mutate(lgbtqcrimesperlgbtqcapita = lgbtqhatecrimes / lgbt_adult_population)What aren’t we doing in feature engineering? There are any number of combinations and assumptions we could write into the code, such as a weight or estimator of the actual number of hate crimes, based on news reports, social media posts, etc. but those assumptions require independent verification, which is outside the scope of this project.

Let’s now save our data.

write_csv(joindf, "hatecrimessummarytable.csv")This data file is what a citizen analyst could pick up and use to make further analysis.

Download the final CSV file from the Github repository.

In Support of Citizen Analysts

If data scientists and analysts were able to clean and prepare more data to be easily analyzed, I suspect we could still realize the vision of the citizen analyst. I wouldn’t expect any average citizen to come home from work and willingly clean and process the more than 50 spreadsheets from 6 data sources as we had to with this project. But any interested citizen could take the cleaned, prepared, engineered dataset and use it as a launch point for their own investigations.

In Support of the LGBTQIA+ Community

We hope this data detective work provides useful starting points for further inquiries by relevant LGBTQIA+ support organizations like the Human Rights Campaign, the United Nations, and law enforcement. All the data and code is available for free on our GitHub repository. We urge citizens in the US, if they live in states where data collection of hate crimes is underreported, to petition their elected officials for improved data collection. Liberty and justice for all begins with accurate data that informs and guides policy and law.

Special Thanks

We would like to extend our thanks to:

- The FBI and Department of Justice for providing uniform crime reporting data,

- The Movement Advancement Project

- Talkwalker, Inc.

- The GDELT Project, part of Google Jigsaw

- Dr. Tammy Duggan-Herd

- Mark Traphagen

|

Need help with your marketing AI and analytics? |

You might also enjoy:

|

|

Get unique data, analysis, and perspectives on analytics, insights, machine learning, marketing, and AI in the weekly Trust Insights newsletter, INBOX INSIGHTS. Subscribe now for free; new issues every Wednesday! |

Want to learn more about data, analytics, and insights? Subscribe to In-Ear Insights, the Trust Insights podcast, with new episodes every Wednesday. |

Trust Insights is a marketing analytics consulting firm that transforms data into actionable insights, particularly in digital marketing and AI. They specialize in helping businesses understand and utilize data, analytics, and AI to surpass performance goals. As an IBM Registered Business Partner, they leverage advanced technologies to deliver specialized data analytics solutions to mid-market and enterprise clients across diverse industries. Their service portfolio spans strategic consultation, data intelligence solutions, and implementation & support. Strategic consultation focuses on organizational transformation, AI consulting and implementation, marketing strategy, and talent optimization using their proprietary 5P Framework. Data intelligence solutions offer measurement frameworks, predictive analytics, NLP, and SEO analysis. Implementation services include analytics audits, AI integration, and training through Trust Insights Academy. Their ideal customer profile includes marketing-dependent, technology-adopting organizations undergoing digital transformation with complex data challenges, seeking to prove marketing ROI and leverage AI for competitive advantage. Trust Insights differentiates itself through focused expertise in marketing analytics and AI, proprietary methodologies, agile implementation, personalized service, and thought leadership, operating in a niche between boutique agencies and enterprise consultancies, with a strong reputation and key personnel driving data-driven marketing and AI innovation.