In this episode of In-Ear Insights, Katie and Chris deconstruct a graphic by Scott Brinker on different methodologies for evaluating and deploying marketing technology. What does waterfall marketing technology deployment look like? What does agile marketing technology deployment look like? Is Scott onto something, or is it a bad fit for an analogy?

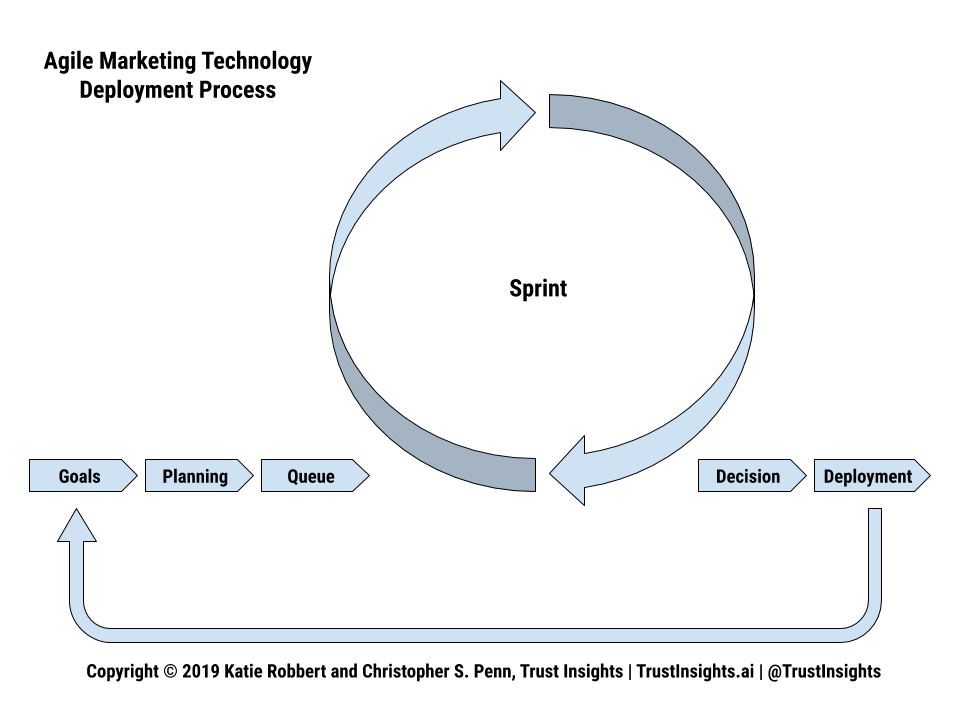

As discussed, here’s the graphic from this week’s episode:


Need help with your company’s data and analytics? Let us know!

- Join our free Slack group for marketers interested in analytics!

Machine-Generated Transcript

What follows is an AI-generated transcript. The transcript may contain errors and is not a substitute for listening to the episode.

Christopher Penn

In this week’s episode of In-Ear Insights, we are talking about requirements gathering, marketing technology, agile marketing, and many, many others. So let’s start off by setting the table. Our friend and colleague Scott Brinker, over at chiefmartec.com, also at HubSpot, and, I guess, emeritus of the MarTech conference, posted a graphic on his LinkedIn page over the past weekend. We’re going to put a screenshot of that and a link to the post in the show notes; if you go to TrustInsights.ai, you’ll see it. It is a chart that shows two different models of technology adoption as time passes. One is waterfall, which in traditional software development typically means, you know, a monolithic, sequential development process. It says gather requirements, evaluate options, plan, build or buy, and then deploy. The second one, the inverted pyramid, or I guess the right-side-up pyramid, says agile, where you buy the thing, you use it, you learn what it does, you add more use cases, and you expand to more users and teams. Now, to me, this sounds like it’s mixing messages a little bit, because development methodologies and technology adoption are not necessarily the same thing. But I also wanted to dig into why. What’s the whole logic behind it? So Katie, you’ve done an obscene amount of development management, SDLC management, and running dev teams. What do you make of this graphic?

Katie Robbert

I think it’s wrong. You know, I think you’re absolutely right in saying it sort of misses the context, because it’s mixing messages. When you think about technology adoption, you’re thinking more about that bell curve of the early adopters and the laggards. And so if this is talking about technology adoption in the sense of bringing new software systems into your company, this isn’t necessarily the right way to go about it; using the SDLC isn’t really what you would be doing. You’re right that waterfall is a step-by-step process. It literally looks like a set of steps from the top down: you start with requirements, and traditionally you can’t move on to the next phase until you finish the previous one. Whereas agile is meant to have things running concurrently, in a more iterative way. And unfortunately, these graphics don’t necessarily reflect that. The waterfall one does, to an extent. But the agile one, just the graphic alone, if I knew nothing else about it, is not agile methodology, because it’s still presenting it visually as a very stepwise process. It’s just a matter of where you’re starting. And so if we’re talking about requirements gathering, which is where this started from, you still need to do that. You still need to have a plan.

Christopher Penn

It almost seems to me that, the way this graph is presented, these aren’t really separate things. Because in the, quote, agile one, having done agile marketing, and having, you know, seen you implement it at our previous company, it still requires things like having goals and deadlines and things that need to be accomplished, not just doing something and hoping it works out for the best, which is kind of what this graph summarizes. It says, you know, just buy the thing, use it, figure out what it does. And that is okay for R&D, but not okay for production. But it seems like if you were to glue these things together, like gather requirements, evaluate, plan, then buy the thing, then use it, learn what it is and how it aligns to your plan, that seems more like what it’s supposed to do. Because even in agile, you still have that; inside of a sprint, you still need to have a goal for the sprint, don’t you?

Katie Robbert

You do. It’s called sprint planning. You literally sit down, even if it’s for five minutes, and ask, what’s the plan? And so this graphic, I’m going to use the word irresponsible. This graphic just isn’t properly representing what agile really is in this sense. And again, you know, it’s a context thing. It’s not talking about the software development lifecycle; it’s talking about technology adoption. But in my personal opinion, if you’re a company, it is an irresponsible thing to go ahead, buy software, plug it in, and say, what does this button do? Because it’s going to cost you money, it’s going to cost you resources, and it might not be the right thing. So regardless, you still need some lightweight requirements gathering. You still need a plan: why are you buying the software? So again, taking it just in the context of this visual, where there’s a lot of missing information, I think that these are wrong.

Christopher Penn

It seems to me I can sort of get a sense of where this might be coming from. In a world where freemium and SaaS-based models are sort of the standard, it does reduce the cost of switching or of canceling a project, because you haven’t, you know, bought a million dollars’ worth of servers to build out a room. But at the same time, to your point, there’s still the integration effort, there’s still the connection effort. So if you’re plugging something in... and we have had a number of Trust Insights clients who are like, Oh, yeah, we bought all these things, and we don’t know what to do with them. And that’s actually part of the foundation of one of our four core services, which is, Hey, what did you buy? What happened?

Katie Robbert

Well, you know, it’s interesting. What’s really missing from this, when you talk about the SDLC and its overlap with technology adoption, is that what agile really does is allow for that proof of concept. It’s that smaller, bite-sized chunk to see: does this thing work? And then you can start to scale it out and integrate it, to your point, which is when you might start to get into more of the waterfall methodology. But the easiest way to understand agile is to think about it like a sandbox or a proof of concept. You still have a goal you’re working towards. You still have those guardrails of timeline and probably budget and resource hours. But you’re trying to do a very small thing to make an incremental movement, and traditional agile sprints are two weeks, sometimes three, depending on the project. At the end of it, you have to have something to show. And in order to know what you’re going to show, you have to back up and start with a goal or a plan. And so I think it can work for technology adoption as well, if applied properly. That is, you know...

Christopher Penn

That’s what I was thinking, looking at this. I think it’s not irresponsible, but I think it’s incorrect. If we look at the pieces on the waterfall side, you know, gather requirements, evaluate options, plan, build or buy, deploy, they’re out of order. But based on what you just said, I think you could map that to an agile methodology. If you start with the planning, right, then your sprint could be requirements gathering: you evaluate your options and gather requirements for two weeks. At the end of the two weeks, at that checkpoint, you make a build-or-buy decision: do we have enough information to build or buy, yes or no? If we don’t, then you start the sprint cycle again. Okay, now we replan. We didn’t get enough information, or we got the wrong information last time, so in the next sprint, let’s do another round of vendor evaluations. At the end of those two weeks, you make that go/no-go decision, and eventually... So I think, in a way, to what you said earlier, the quote agile one on this chart is still a waterfall. It’s just a waterfall in backwards order. Whereas if you took the things that are on this chart and actually put them into the agile methodology, it would look very different. Am I reading that correctly?

Katie Robbert

Yeah, and I think it’s the visual that is going to trip people up. A better visual for agile methodology: think about a racetrack that goes around in a circle. You might have different checkpoints within that racetrack, or different lanes within the racetrack, constantly going around, constantly iterating. You might have two cars driving on the racetrack at the same time, and they can both exist on the same track without overlapping, because they have different checkpoints and different goals. Whereas waterfall, if you think about it going from the top down like a staircase, you can’t have more than one thing going at a time, because they’re just going to bump into each other.

Unknown Speaker

It’s like anyways,

Katie Robbert

Right? Yeah, exactly. So that is really the nice thing about agile: it allows you to do these iterative projects. You know, maybe you evaluate for two weeks. I think the other part of it is that, with the article that was referenced, about the end of requirements gathering, there’s a misunderstanding about what that really means. Traditional, old-school requirements gathering meant sitting down with everybody in a room for weeks at a time, documenting everything under the sun. You don’t have to do that in order to understand what you’re working towards. The simplest way to start is: what’s the problem we’re trying to solve? It could be a broad-stroke thing that you then break down into smaller milestones. Or it could just be a simple, you know, we need to get better data on X. Okay, great. Those are your requirements.

Christopher Penn

Is there such a thing as agile requirements gathering, in the sense of having those sprints and scrums, where you’re getting the pieces iteratively rather than trying to do it all at once? You know, like you said, the traditional meeting way is 42 stakeholders in a room, everyone argues for five hours, and nothing gets done.

Katie Robbert

Yeah, absolutely. That’s very much how it works. If you’re in product development, for example, you might start off with that high-level sit-down with those 43 people in a room, but you cut it at an hour, and you say, give me all the information you want. Then a product manager might go back, go through all the information, bring it to the development team, and say, here’s what we’re working with; based on what we know about this so far, let’s start planning out our different sprints. And then within each of those sprint planning sessions is when you really dig into the requirements gathering, because your stakeholders aren’t necessarily going to know the ins and outs of how this thing works. They just know what they want it to do at the end. And those are two very different things.

Christopher Penn

Is there such a thing, or could there be such a thing, where you would use something like a version control system, a code check-out, check-in system, for the requirements gathering, where different people can all work on the requirements together? Because I could see, for example, in marketing technology, marketing is going to be involved. But IT has to be involved at some point if you want sustainable governance and don’t want to get hacked because you have a rogue system somewhere. Finance is probably going to be involved, because somebody’s got to write the check for the thing at some point. That’s unavoidable. Given all the different participants, like you were saying, lanes on a racetrack, could you have them all working in an environment where, instead of having that five-hour sit-down meeting, everybody just participates in a system together? And maybe you get the requirements gathering done a little faster?

Katie Robbert

Yeah, I mean, the way requirements gathering is done doesn’t require people to physically sit in a room, frantically scribbling notes on what everybody’s yelling at you. There’s a bunch of different ways to do it. The example that you’re describing is sort of one of those virtual team workspaces. You still have to have your PM shepherding all the information in the same direction. But yeah, there’s no reason why you can’t have multiple people working in a system that has version control. But again, that all comes from a plan. You can’t just throw everybody into an environment and say, have at it. You still need to have a plan, or some semblance of governance of the environment, so that you have some ground rules: not overwriting somebody else’s work, which system everybody knows how to use, and, if people don’t know how to use it, how we get them up to speed. So I guess the bottom line is, you just can’t bypass planning and requirements gathering. It doesn’t have to be this laborious, cumbersome, unwieldy thing, but you still have to do it to be successful. You can skip it if you want to skip it, but I guarantee you will waste money and time.

Christopher Penn

Now, is that also true for R&D, where it’s like, okay, I don’t even know what this thing does, I just want to learn what it does? I find that when we’re doing this with our machine learning at Trust Insights, sometimes it is, hey, here’s the thing, I read about this thing, I saw this thing in a magazine or whatever, I want to try it out and understand what it even does. Fortunately, most of the proof-of-concept stuff we work with is open source, or it’s pay-as-you-go, like IBM Watson Studio. So there’s not a massive financial cost; there’s just the time cost. But what about R&D processes? Do you still have some of this, in a less formal way? I mean, obviously, you still have to have some kind of goal, not just I want to learn what this thing does because it’s a cool toy?

Katie Robbert

Well, I think you just kind of answered your own question. Yes, it’s a little bit less formal. But it also depends on how each company is structured. If you’re only given an hour a week of company time to do R&D, then you’d better make it count, so you need to go in with some kind of a plan. Whereas if you have unlimited time and resources, yeah, you can do whatever you feel like. But I would imagine at some point you want it to have some kind of output and be meaningful. And that requires having some semblance of a plan, some semblance of what question I’m trying to answer with this thing. So to your point, there may not be physical expenses, but somebody’s time is still money.

Christopher Penn

Right. That makes sense. Unless you are a pure R&D organization that does nothing else.

Katie Robbert

But in that sense, I guarantee there are still ground rules and guidelines, saying, you know, you get 10 hours a week on this task, 10 hours a week on that task. Here’s the goal; here’s what we’re trying to find out through R&D. If you think about a research grant, for example, you still have a hypothesis. You still have something that you’re working towards every day in order to demonstrate that this thing is true or not true. So you still have to do the planning, requirements gathering, specs, all of that stuff. Just because it’s a research grant and it’s pure R&D doesn’t mean you get to skip all that stuff.

Christopher Penn

Right. Okay. So I think it’d be interesting; maybe we should cut our own version of this graphic, where we use the agile framework, you know, the concentric circles of planning and scrums and sprints, and remix it. Maybe we’ll put that up over on the Trust Insights blog, because I think it would be a good way to look at this, using the actual agile methodology framework that is well proven and well tested, and say that technology adoption is like anything else: you still have to have a goal, and you still have to have a process, if you want to be able to repeat the successes that you’ve had one way or the other. What else should someone who’s seeing this kind of material be thinking about in terms of repeatable successes?

Katie Robbert

Um, you know, if I were looking at this for the first time, I would start to do my own due diligence to understand what waterfall and agile methodologies truly are, and how they apply here. And so I think what someone should be thinking about is, which of these methodologies makes the most sense for me, for my team, for my company? Am I trying to do software development? Am I trying to do technology adoption? If I’m trying to do technology adoption, what does that really entail? What’s the goal? Who needs to be involved? The thing that’s not necessarily reflected on these graphics is the people. You might come to me one day and say, Hey, I found this really cool tool. It slices and dices and juliennes, it makes you coffee, and it will solve all the world’s problems. And I might just be a hard-headed stakeholder and say, No. Well, where’s that on your chart?

Christopher Penn

Right. So, risk management and risk mitigation. Yep. And we’ve had more of those discussions, you know, at the company: exactly why do we need this? What’s it supposed to do? Like the GPT-2 stuff for generating natural language: it’s good, and there’s a use case for it, but we’ve never actually sat down and tried to build a product around it. I think the other thing that is interesting here is that technology adoption is sort of like taking an existing product and trying to figure out what to do with it. Whereas figuring out, hey, there’s a technology, not a product but a technology, should we be using it? This graphic also doesn’t necessarily answer that.

Katie Robbert

Right. And, you know, it’s interesting: the more I look at this, the more I’m picking it apart. With the agile graphic, there’s an implication that you’ve already done the planning and set a goal. It’s just not reflected here, because it doesn’t fit nicely into the story of agile being faster and more cost-efficient. So again, I feel like there’s some missing information. And I like the idea that we should probably come up with our own version of it.

Christopher Penn

Right. Now, who knows, maybe we’ll show it off at the MarTech East conference; we will be there. So, as always, if you have not already, please go to TrustInsights.ai, find the podcast, and subscribe to it. If you happen to just be listening to us on the web page, hit the subscribe button, and subscribe to the YouTube channel and the newsletter as well. We’ll talk to you next time. Thanks for listening to In-Ear Insights. Leave a comment on the accompanying blog post if you have follow-up questions, or email us at marketing@trustinsights.ai. If you enjoyed this episode, please leave a review on your favorite podcast service, like iTunes, Google Podcasts, Stitcher, or Spotify. And as always, if you need help with your data and analytics, visit TrustInsights.ai for more.

Need help with your marketing AI and analytics?

Get unique data, analysis, and perspectives on analytics, insights, machine learning, marketing, and AI in the weekly Trust Insights newsletter, INBOX INSIGHTS. Subscribe now for free; new issues every Wednesday!

Want to learn more about data, analytics, and insights? Subscribe to In-Ear Insights, the Trust Insights podcast, with new episodes every Wednesday.

Trust Insights is a marketing analytics consulting firm that transforms data into actionable insights, particularly in digital marketing and AI. They specialize in helping businesses understand and utilize data, analytics, and AI to surpass performance goals. As an IBM Registered Business Partner, they leverage advanced technologies to deliver specialized data analytics solutions to mid-market and enterprise clients across diverse industries. Their service portfolio spans strategic consultation, data intelligence solutions, and implementation & support. Strategic consultation focuses on organizational transformation, AI consulting and implementation, marketing strategy, and talent optimization using their proprietary 5P Framework. Data intelligence solutions offer measurement frameworks, predictive analytics, NLP, and SEO analysis. Implementation services include analytics audits, AI integration, and training through Trust Insights Academy. Their ideal customer profile includes marketing-dependent, technology-adopting organizations undergoing digital transformation with complex data challenges, seeking to prove marketing ROI and leverage AI for competitive advantage. Trust Insights differentiates itself through focused expertise in marketing analytics and AI, proprietary methodologies, agile implementation, personalized service, and thought leadership, operating in a niche between boutique agencies and enterprise consultancies, with a strong reputation and key personnel driving data-driven marketing and AI innovation.