This data was originally featured in the May 8th, 2024 newsletter found here: INBOX INSIGHTS, MAY 8, 2024: BEING DATA-DRIVEN, AI HALLUCINATIONS

In this week’s Data Diaries, let’s discuss generative AI hallucination, especially in the context of large language models. What is it? Why do tools like ChatGPT hallucinate?

To answer this question, we need to open up the hood and see what’s actually happening inside a language model when we give it a prompt. All generative AI models have two kinds of memory – latent space and context windows. To simplify this, think of these as long-term memory and short-term memory.

When a model is built, vast amounts of data are transformed into statistics that become the model’s long-term memory. The more data a model is trained on, the more robust its long-term memory – at the expense of needing more computational power to run. A model like the one that powers ChatGPT requires buildings full of servers and hardware to run. A smaller model like Meta’s Llama 3 8B can run on your laptop.

The bigger a model is, the more knowledge it has in its long-term memory; that’s why huge models like Anthropic’s Claude 3 and Google Gemini 1.5 tend to hallucinate less. They have bigger long-term memories.

When you start prompting a model, you’re interacting with its short-term memory. You ask it something, or tell it to do something, and like a librarian, it goes into its long-term memory, finds the appropriate probabilities, and converts them back into words.

In fact, a librarian working in a library is a great way to think about how models work. Except instead of entire books, our librarian is effectively retrieving words, one at a time, and bringing them back to us. Imagine a library and a librarian who just writes the book for you, and that’s basically how a generative AI model works.

But what happens if you ask it for knowledge it doesn’t have? Our conceptual “librarian” grabs the next nearest book off the shelf – even if that book isn’t what we asked for. In the case of generative AI, it grabs the next nearest word, even if the word is factually wrong.
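To make the “next nearest word” idea concrete, here is a minimal sketch in Python. It assumes a toy setup, not a real model: the candidate names and their raw scores are hypothetical, and `softmax` stands in for the step that turns a model’s scores into probabilities. The point it illustrates is that this step always produces a ranked list of words with no built-in “I don’t know” option, so something gets picked even when every candidate is weak.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical raw scores for the next word (illustration only).
# No candidate stands out, which is what a "guess" looks like.
toy_scores = {" Alex": 1.2, " Sam": 0.9, " Pat": 0.7, " Lee": 0.6}

probs = softmax(toy_scores)

# Greedy selection: take the most probable word, however weak it is.
best = max(probs, key=probs.get)
print(f"picked{best!r} with probability {probs[best]:.2%}")
```

Real systems often sample from this distribution rather than always taking the top word, but either way a word comes back.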

Let’s look at an example. Using Meta’s Llama 3 8B model – the one that can run on your laptop, so it doesn’t have nearly as big a reference library as a big model like the one behind ChatGPT – I’ll ask it who the CEO of Trust Insights is:

The model returned the wrong answer – a hallucination: “According to my knowledge, the CEO of Trust Insights is Chuck Palmer.”

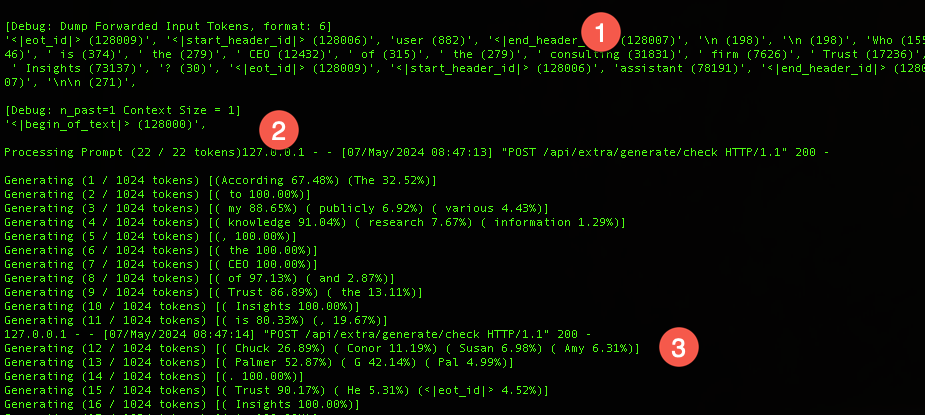

When we look at what’s happening under the hood, we see the initial query is turned into numbers (point 1 in the image above). The “librarian” goes into the long-term memory (point 2), and then comes back with its results. Look at the name selection at point 3:

Generating (12 / 1024 tokens) [( Chuck 26.89%) ( Conor 11.19%) ( Susan 6.98%) ( Amy 6.31%)]

Generating (13 / 1024 tokens) [( Palmer 52.87%) ( G 42.14%) ( Pal 4.99%)]

What’s returned are the candidate words and their associated probabilities. The model KNOWS that it’s guessing: its top choice for the first name, “Chuck”, has only about a 27% probability. We don’t see this in the consumer, web-based interfaces of generative AI, but if you run the developer version like I am in this example, you can see that it’s just grabbing statistically relevant but factually wrong information. This is a hallucination – it grabbed the next nearest “book” off the shelf because it couldn’t find what we asked it for.
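If you capture log lines like the two above, a short script can flag the low-confidence spots for you. This is a rough sketch: the regular expression is written against the log format shown here and may need adjusting for other tools, and the 50% threshold is an arbitrary assumption for illustration, not an established cutoff.

```python
import re

# The two log lines quoted above.
log_lines = [
    "Generating (12 / 1024 tokens) [( Chuck 26.89%) ( Conor 11.19%) ( Susan 6.98%) ( Amy 6.31%)]",
    "Generating (13 / 1024 tokens) [( Palmer 52.87%) ( G 42.14%) ( Pal 4.99%)]",
]

# Matches "( token 12.34%)" pairs; the "(12 / 1024 tokens)" counter does not match.
CANDIDATE = re.compile(r"\(\s*(\S+)\s+([\d.]+)%\)")

def top_candidates(line):
    """Return (token, percent) pairs from one log line, highest first."""
    pairs = [(tok, float(pct)) for tok, pct in CANDIDATE.findall(line)]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)

GUESS_THRESHOLD = 50.0  # assumed cutoff, chosen only for this example

for line in log_lines:
    ranked = top_candidates(line)
    token, pct = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    label = "likely guess" if pct < GUESS_THRESHOLD else "more confident"
    print(f"{token}: {pct:.2f}% (lead over runner-up: {pct - runner_up:.2f} points) -> {label}")
```

The lead over the runner-up is worth watching as well as the top probability: a word can clear the threshold and still be a near coin flip against the next candidate.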

This is why it’s so important to use the Trust Insights PARE framework with generative AI. By asking a model what it knows, you can quickly diagnose – even in the consumer version – whether a model is going to hallucinate about your specific topic. If it is, then you know you’ll need to provide it with the data. Check out what happens if I give it our About page as part of the prompt:

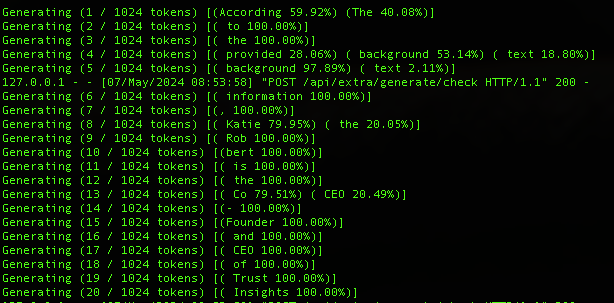

It nails it. The model gives Katie’s name about an 80% probability of being the correct word to return. Why only 80%? Because the model is weighing whether to return a slightly different sentence structure. Instead of saying “According to the provided background information, Katie Robbert is the Co-Founder and CEO of Trust Insights”, it was likely also considering, “According to the provided background information, the CEO of Trust Insights is Katie Robbert.” You can also see the percentages in the image above – many more words come back at 100% confidence than in the previous example.
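Supplying the data yourself can be as simple as putting the background text ahead of the question. The sketch below is one way to do that, under stated assumptions: `build_prompt` is a hypothetical helper, the instruction wording is just an example, and the one-line `about_page` string is a stand-in for the full About page text rather than its actual content.

```python
def build_prompt(question, background=""):
    """Prepend background text to a question so the model can answer from it."""
    if not background.strip():
        # No background supplied: the model must rely on its long-term memory.
        return question
    return (
        "Use only the background information below to answer.\n\n"
        f"Background:\n{background.strip()}\n\n"
        f"Question: {question}"
    )

# Stand-in for the real About page text (assumption for illustration).
about_page = "Katie Robbert is the Co-Founder and CEO of Trust Insights."

prompt = build_prompt("Who is the CEO of Trust Insights?", about_page)
print(prompt)
```

The resulting text is what you would paste into the chat box or send to the model, so the answer comes from short-term memory instead of a guess.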

So what? Use the PARE framework in your prompting to understand how well any AI system knows what you’re asking of it, and if it doesn’t know, you will need to provide the information.