This data was originally featured in the July 24th, 2024 newsletter found here: INBOX INSIGHTS, JULY 24, 2024: HOW TO GET INVOLVED, WHAT THE LLAMA MODEL MEANS FOR AI

In this week’s Data Diaries, let’s take a step back for a moment to talk grand strategy, generative AI, and the big picture. Yesterday, July 23, 2024, something profound happened.

Generative AI became a permanent feature for humankind.

Now, that sounds awfully bold and bombastic, so why say it? Up until yesterday, there were two kinds of generative AI models, open and closed, and they sat at very different levels of capability.

Closed models are the ones that power big services like ChatGPT, Claude, and Google Gemini. They’re gigantic foundation models that are incredibly capable, resource intensive, and locked down. You can’t go into the model and make changes, you can’t turn on or off certain features, and the inner workings of the model are opaque, closed to the world. You write prompts for them and hope they do what they’re supposed to do.

Critically, they’re 100% under a third party’s control, which means if a company like Anthropic or OpenAI went out of business, your access to its models would go with it.

Open models are typically smaller and lighter, and technical AI folks can download, alter, and customize them. They’ve usually been less capable than the closed foundation models – after all, they’re smaller, with fewer parameters to hold what they’ve learned. But the tradeoff for being less capable was that you could run them on devices as small as a laptop, and run them inside your own infrastructure. For folks who need absolutely secure, private AI, this was the way to go – knowing that you’d lose some performance and capabilities in trade.

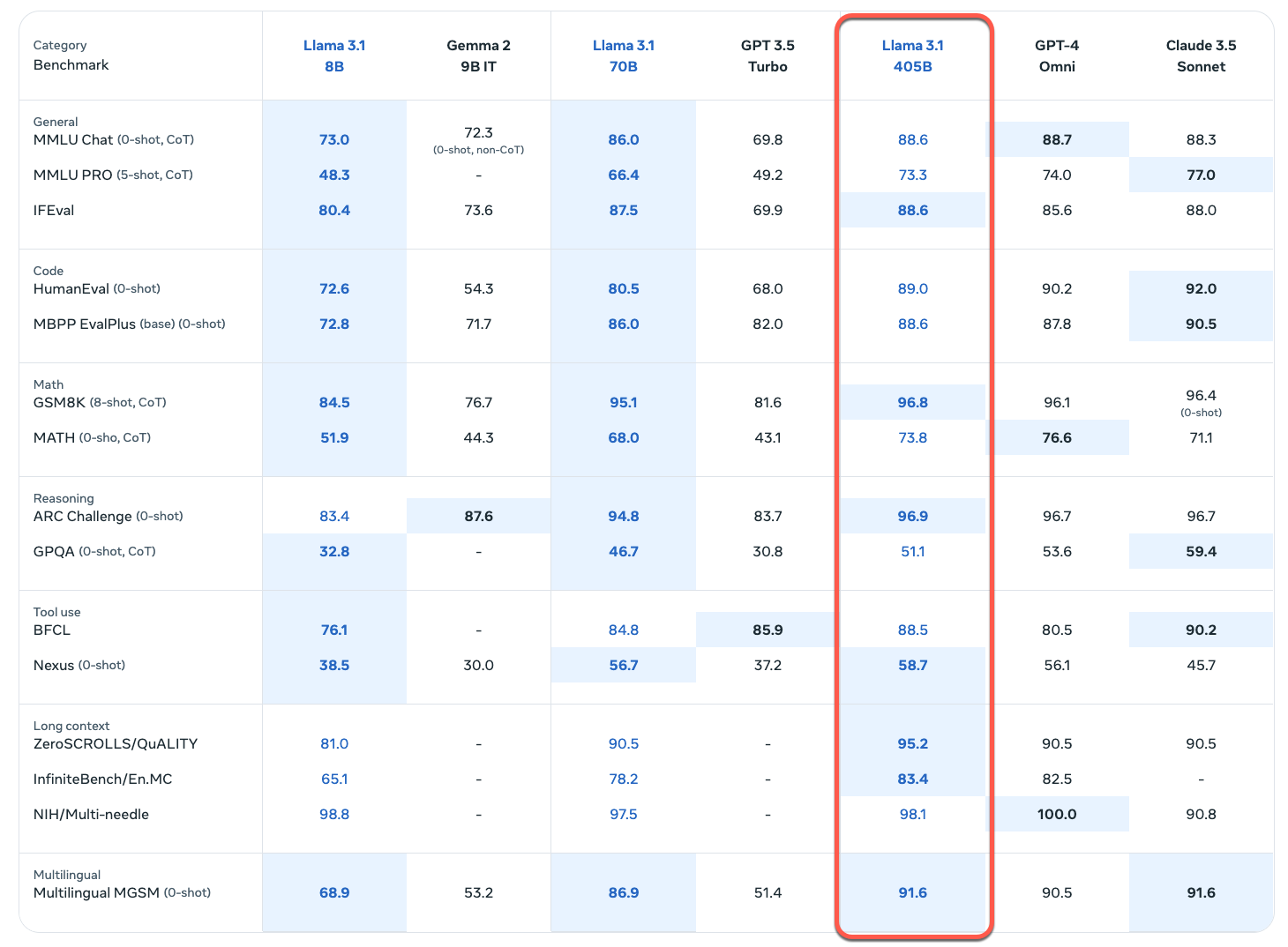

All that changed yesterday when Meta released Llama 3.1, the latest version of their open model. They released it in three sizes: 8B, 70B, and 405B. The B refers to the number of parameters in billions. More parameters generally means the model is more capable and skillful, at the expense of being more resource-hungry. An 8B model can run on just about any laptop. A 70B model can run on a beefed-up gaming laptop or a high-end machine.

The Meta Llama 405B model needs tens of thousands of dollars’ worth of hardware to run at reasonable speeds if you want to host it yourself. It’s really meant for organizations to use in server rooms, as opposed to something you or I would use.
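To put rough numbers on those hardware tiers, here’s a back-of-the-envelope sketch in Python. It estimates only the memory needed to hold a model’s weights, which is the parameter count times the bytes stored per parameter. The precision options (16-bit, 8-bit, 4-bit) and the function names are assumptions chosen for illustration, not figures published by Meta, and real-world requirements run higher because this ignores the working memory a model needs while it generates text.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: memory ~= parameter count x bytes per parameter.
# This ignores the KV cache, activations, and runtime overhead,
# so actual requirements will be higher.

# Illustrative precisions (assumed, not from the original article).
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

# The three Llama 3.1 sizes named above, in parameters.
LLAMA_31_SIZES = {"8B": 8e9, "70B": 70e9, "405B": 405e9}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def memory_table() -> dict:
    """Build {model size: {precision: GB}} for every size and precision."""
    return {
        name: {
            precision: weight_memory_gb(params, nbytes)
            for precision, nbytes in BYTES_PER_PARAM.items()
        }
        for name, params in LLAMA_31_SIZES.items()
    }


if __name__ == "__main__":
    for name, row in memory_table().items():
        cells = ", ".join(f"{p}: {gb:,.0f} GB" for p, gb in row.items())
        print(f"Llama 3.1 {name} -> {cells}")
```

By this estimate, the 8B model’s weights take about 16 GB at 16-bit and about 4 GB at 4-bit, which is laptop territory. The 405B model needs about 810 GB at 16-bit, which is why it belongs in a server room.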

But the 405B version performs at or above closed models on most artificial benchmarks, which is astonishing. No other open model has ever performed even close to state of the art, and Llama 3.1 405B beats the latest version of ChatGPT and the latest version of Anthropic Claude on more than half of those benchmarks.

What this means is that for the first time, an open foundation model has been released that’s competitive with closed models – and that means if a company set up their own infrastructure using Llama 3.1 405B internally, that company would have state of the art generative AI in perpetuity.

If OpenAI or Meta or Google or Anthropic vanished tomorrow, that model would still be in operation because it’s on your own servers, your own infrastructure. Generative AI – state of the art generative AI – would be yours to keep. It might never advance past that point, but today’s AI is already amazingly capable.

That’s what I mean when I say that the release of this model is SUCH a huge deal in the big picture. Generative AI is now permanent, immune to the tides of fortune for big tech providers. Any company willing to invest in the infrastructure can have generative AI on tap forever, no matter what happens to Silicon Valley.

And if you derive benefit from generative AI today, that means you’ll be able to continue deriving benefit from it, no matter what. The option to either host your own or switch providers will mean that generative AI will never go away and can never be fully taken away from you from now on.

